The Misuse of Artificial Intelligence

The rapid advancement of Artificial Intelligence (AI) has unlocked seemingly limitless automation possibilities and pushed the boundaries of what machines can achieve.

However, misused AI poses substantial risks to society at large. For example, experts have raised concerns that AI can give malicious actors new tools for nefarious purposes such as fraud, theft, cyberattacks, or even the construction of nuclear or biological weapons.

In this article, we provide an overview of malicious AI use and explore what measures can be taken to safeguard against unethical, unlawful, and abusive applications of AI.

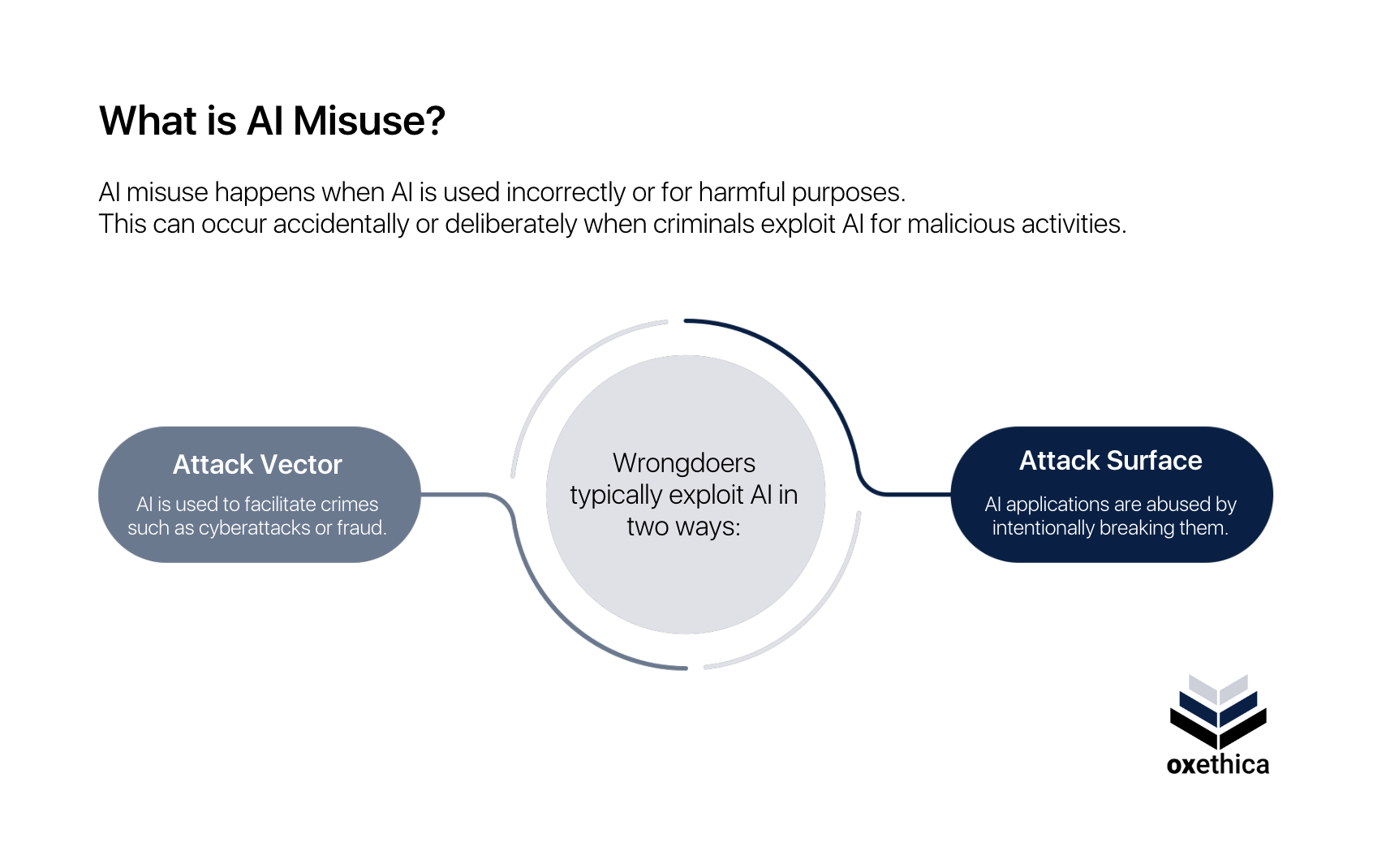

What Is AI Misuse?

In short, AI misuse occurs when AI systems are used in the wrong way or for the wrong purpose. The former often happens by accident, through a misunderstanding of AI capabilities and risks, while the latter typically involves wrongdoers exploiting AI to cause harm or commit crimes. Such deliberate misuse takes one of two forms:

- Attack vector: They may use AI technologies as tools to facilitate crimes such as automated cyberattacks, fraud or identity theft.

- Attack surface: They may target existing AI applications themselves, deliberately attacking or breaking them, for example by hacking into smart home assistants such as Amazon’s Alexa.

Why is AI Vulnerable to Misuse?

Automation at Scale

AI models have advanced rapidly, enabling the automation of complex generative tasks that once required specialised expertise. This also lowers the barrier to misuse: in cybersecurity, for instance, large language models can help malicious actors with minimal technical knowledge create malware.

Open Access

Freely available AI tools such as ChatGPT and Claude, along with open-source algorithms, have made AI available to the masses. This unprecedented availability allows individuals with minimal technical expertise to exploit AI's powerful capabilities.

Lack of Harmonised Regulation

At present, lawmakers are struggling to keep pace with rapid advances in AI development. Although legal frameworks such as the EU AI Act and New York City's law on automated employment decision tools have recently come into effect, there is no unified global standard for AI safety and ethics, unlike for other high-risk technologies. This gap gives malicious actors a window of opportunity to exploit AI's capabilities before regulations are harmonised globally.

Examples of AI Misuse

The misuse of AI can have severe consequences for society at large. Small-scale fraud and scams cause not only financial losses but also harm to individuals' well-being. On a large scale, intentional misuse of AI could destabilise democracy, erode trust in authorities, or potentially empower terrorists with sophisticated arms and destabilising tactics.

Weapons

Malicious use of AI for arms and weaponry already poses a considerable risk that should not be relegated to science fiction or the distant future. With further improvements in AI’s reasoning and mathematical abilities, AI could become a powerful tool for creating blueprints for all types of weaponry. Moreover, the rise of autonomous vehicles and drones gives malicious actors opportunities to repurpose commercial systems for destruction or to build autonomous weapon systems that lack human control.

Example:

Consider OpenAI’s recently presented o1 models. The company stated that its own evaluations rated them as posing a “medium risk” of facilitating the construction of nuclear, biological, and radiological weapons.

Although OpenAI’s Chief Technology Officer at the time, Mira Murati, stated that the new models outperform previous ones on safety metrics and do not introduce risks beyond what is already possible, the advances in AI models’ reasoning, scientific, and mathematical skills should prompt industry and policymakers to put appropriate guardrails and limits in place.

Rogue AI

Some experts have expressed concern about unprecedented emergent abilities in AI. The worry is that, with increasing complexity and intelligence, AI may become unpredictable and capable of operating autonomously. This potential for unpredictability and autonomy could make AI itself a weapon: malicious actors may create or reprogramme AI with the intention of unleashing it as a rogue agent to pursue dangerous goals.

Example:

Rogue AI agents may seem an unlikely scenario at the moment, but the open-source project ChaosGPT has already illustrated the risk. Its developers created ChaosGPT as an autonomous version of ChatGPT by bypassing its safety filters, then prompted the AI with commands such as "establish global dominance" and "destroy humanity."

In response, the AI began gathering information on weapons of mass destruction, started a Twitter campaign against humans, and even tried to manipulate ChatGPT into becoming its evil sidekick. However, ChaosGPT was unable to formulate or carry out long-term plans.

AI Malware

AI has serious implications for cybersecurity. Cybercriminals can harness AI to make malware more efficient, less predictable, and harder to detect. AI-driven attacks can identify vulnerabilities in computer systems, adapt in real time, and spread malware with greater speed and accuracy.

Moreover, AI can generate code and automate a wide range of hacking techniques, including password guessing, CAPTCHA breaking, and even bypassing AI-based security and detection systems, raising the sophistication and scale of potential cyberattacks.

Example:

In 2018, researchers at IBM presented DeepLocker, a proof-of-concept AI-powered malware that uses deep neural networks to conceal its attack, to demonstrate the new possibilities AI offers for cybercrime. DeepLocker can hide malicious code in unassuming software and activates only when specific "trigger conditions" are met, based on visual, audio, geolocation, or system features. This exploits the “black box” nature of deep neural networks: the malware’s trigger conditions are encoded in a complex network, making it almost impossible to decode the trigger, reverse engineer the malware, or predict its behaviour.

Advanced Scams

Generative AI models can produce text in natural and programming languages as well as high-quality images and videos, both realistic and artistic. This enables a myriad of possibilities for impersonation, from personalised, targeted spam emails built on data scraped from social media to speech synthesis and so-called deepfakes that convincingly impersonate someone’s identity. AI makes social engineering attacks more effective because generative models can learn from all kinds of available data (usually collected without consent) to recreate a convincing identity online.

Example:

In February 2024, an employee of a multinational company in Hong Kong transferred 25 million USD to scammers who staged a video conference using deepfake technology. The scammers used digitally manipulated video to pose as colleagues familiar to the employee, including the company’s chief financial officer. Deepfakes have also been misused to create fake pornographic images and videos of celebrities and South Korean university students, which has become a large-scale problem involving blackmail, abuse, harassment, and defamation.

Manipulation and Disinformation

Similarly, generative AI can be exploited to disseminate disinformation. Impersonation through deepfakes can be used to launch disinformation campaigns that delegitimise authoritative figures or manipulate public opinion, for instance by posing as politicians or scientists.

Consequently, public trust in authorities may be strategically eroded by casting doubt on the trustworthiness of sources. So-called persuasive AI can contaminate the information landscape with strategic lies by exploiting users' trust in the systems they use or the sources they consult.

Example:

In January 2024, a political consultant commissioned a robocall that went out to thousands of voters, impersonating Joe Biden and discouraging them from voting in New Hampshire's primary election. More recently, AI-generated documents and images have circulated on social media falsely portraying Democratic presidential candidate Kamala Harris as a communist, supporting Republican presidential candidate Donald Trump’s smear campaign against his opponent.

Authoritarian Governments and War Crimes

AI may also be misused by irresponsible and authoritarian governments or other political actors to seize or maintain power with the help of sophisticated technological tools. Besides propaganda and disinformation campaigns, AI may be misused for surveillance, social scoring, and profiling in order to control a population and dismantle democratic rule and civil liberties.

In this case, AI could eliminate the need for loyal or indoctrinated functionaries to maintain totalitarian and authoritarian structures, and could consequently make those structures more resilient. In current conflicts, AI misuse in warfare can arise through irresponsible automation and unlawful processing of sensitive population data, blurring lines of responsibility and potentially increasing the number of deaths.

Example:

According to media reports, the Israel Defense Forces make strategic use of an AI-based targeting system that processes biometric data, and its use in bombings aimed at members of Hamas has led to the deaths of Palestinian civilians. Overall, the high death toll and scale of destruction in Gaza may partly be due to unethical, AI-powered automation of warfare.

Unintentional Misuse

AI misuse can happen accidentally, even without harmful intent. This often stems from a lack of awareness about AI risks and neglect of ethical principles during development. To learn more about potential AI dangers, see our article on the topic.

Example:

Consider healthcare companies that use AI to generate clinical study reports. While the aim is to reduce costs, optimise resources, and improve accuracy, overreliance on AI in healthcare could lead to severe outcomes, such as the approval of unsafe medications, posing significant risks to public health and safety.

Moreover, companies face considerable liability if their AI-driven systems inadvertently lead to harmful products entering the market. Thus, the application of AI needs to be considered carefully, especially in high-risk sectors such as healthcare, education, military, and biometric identification.

Barriers to Preventing AI Misuse: Open Source Models

Open-source AI models can make AI development more accessible and transparent, but they also carry unique risks compared to closed-source alternatives. Current misuse prevention methods do not work well for open-source AI models, because users can easily disable built-in safety features when running these models on their own machines. 'Jailbreaking' techniques can also bypass safeguards in online AI models. While providing access only through an Application Programming Interface (API) can help reduce misuse risks, it cannot eliminate them. Nevertheless, developers continue to improve the fairness and safety of their open-source models and have succeeded in putting various guardrails against misuse and abuse in place.

How can AI Misuse be Prevented?

Although malicious actors will always find ways to cause harm, implementing both proactive and responsive measures can reduce the likelihood and impact of AI misuse.

Education and AI Literacy

Advancing technological education and AI literacy can build resilience to AI-powered scams, impersonation, and disinformation attempts. Education empowers the public to identify AI-generated content, avoid unwittingly spreading fake content, and protect themselves from AI-driven scams.

Global Laws, Regulations and Ethical Guidelines

Establishing global standards for AI safety, ethics, and application is crucial to combating its misuse. Despite efforts like the EU AI Act, more work is needed to safeguard against harmful innovations. While criminal activity cannot be fully eliminated, strong legislation and clear ethical guidelines can discourage and reduce both intentional and unintentional misuse of AI systems.

Safety Culture, Cybersecurity, and Technical Robustness

Establishing a sustainable safety culture within organisations that develop and deploy AI, and investing in cybersecurity and technical robustness, are essential defences against AI misuse. Useful techniques include the following:

- Adversarial Training: Training an AI system on deliberately crafted adversarial inputs exposes weak points and can make the system more robust against attacks.

- Machine Unlearning: This technique removes specific data or knowledge from a trained model, which can help keep AI controllable if unpredictable emergent abilities appear.

- Human-in-the-loop: Involving people at various stages of the AI lifecycle strengthens human oversight.
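To make the idea of adversarial training more concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a production defence: the toy two-cluster dataset, the logistic-regression model, the perturbation budget `eps`, and all function names (`make_toy_data`, `fgsm`, `train`, `accuracy`) are hypothetical choices made for this example. The adversarial inputs are crafted with the fast gradient sign method (FGSM), one common way of generating attacks; real systems typically use larger models and stronger attacks.

```python
import math
import random

# Minimal sketch of FGSM-based adversarial training on a toy logistic-regression model.
# The data, model, and eps budget are illustrative assumptions, not a real system.


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def predict(w: list[float], b: float, x: list[float]) -> float:
    """Probability that input x belongs to class 1."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm(w: list[float], b: float, x: list[float], y: int, eps: float) -> list[float]:
    """Fast gradient sign method: shift each feature by eps in the direction that increases the loss."""
    p = predict(w, b, x)
    # For binary cross-entropy on a logistic model, d(loss)/d(x_i) = (p - y) * w_i.
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]


def make_toy_data(n: int = 50, seed: int = 0) -> list[tuple[list[float], int]]:
    """Hypothetical data: class 1 clustered around (2, 2), class 0 around (-2, -2)."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        data.append(([rng.gauss(2.0, 0.5), rng.gauss(2.0, 0.5)], 1))
        data.append(([rng.gauss(-2.0, 0.5), rng.gauss(-2.0, 0.5)], 0))
    return data


def train(data, epochs: int = 50, lr: float = 0.1, eps: float = 0.0, seed: int = 0):
    """Stochastic gradient descent; if eps > 0, each example is replaced by its FGSM version."""
    rng = random.Random(seed)
    w = [0.0] * len(data[0][0])
    b = 0.0
    for _ in range(epochs):
        samples = list(data)
        rng.shuffle(samples)
        for x, y in samples:
            # Craft the attack against the *current* model, then learn from the attacked input.
            x_train = fgsm(w, b, x, y, eps) if eps > 0 else x
            err = predict(w, b, x_train) - y
            w = [wi - lr * err * xi for wi, xi in zip(w, x_train)]
            b -= lr * err
    return w, b


def accuracy(w, b, data, eps: float = 0.0) -> float:
    """Share of correct predictions, optionally under an FGSM attack of size eps."""
    correct = 0
    for x, y in data:
        x_eval = fgsm(w, b, x, y, eps) if eps > 0 else x
        correct += int((predict(w, b, x_eval) >= 0.5) == (y == 1))
    return correct / len(data)


if __name__ == "__main__":
    data = make_toy_data()
    for name, train_eps in [("standard", 0.0), ("adversarial", 0.5)]:
        w, b = train(data, eps=train_eps)
        clean = accuracy(w, b, data)
        attacked = accuracy(w, b, data, eps=0.5)
        print(f"{name}: clean={clean:.2f}, under FGSM={attacked:.2f}")
```

Running the script trains one model on clean inputs and one on FGSM-perturbed inputs, then prints each model's accuracy on clean data and on data attacked within the same budget. The key design choice is that the perturbation is generated inside the training loop from the current weights, so the model keeps learning from the attacks it is currently most exposed to.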

In A Nutshell

What is AI Misuse?

AI misuse occurs when AI systems are used in the wrong way or for the wrong purpose. The former often happens by accident, through a misunderstanding of AI capabilities and risks, while the latter typically involves wrongdoers exploiting AI to cause harm or commit crimes.

What are Examples of AI Misuse?

AI can automate and amplify various forms of misuse:

- Malware and Scams: Cybercriminals can use machine learning to create more sophisticated, harder-to-detect malware and to run scams that leverage advanced social engineering techniques.

- Disinformation and Deepfakes: Persuasive AI and deepfakes can contaminate the information landscape with convincing strategic lies that delegitimise authoritative figures and manipulate public opinion and trust.

- Automated Warfare and Weapons: AI-powered weapons that make independent targeting decisions can threaten the lives of civilians and blur lines of responsibility for war crimes. As AI technology advances, it could be used to develop weapons of mass destruction or could itself operate as a rogue agent, threatening global stability.

- Unintentional Misuse: Overreliance on AI or a lack of ethical considerations can produce discriminatory outcomes or fundamental rights abuses.

How can the Misuse of AI be Prevented?

While malicious actors will continue to exploit AI, responsive and preventative measures can reduce its misuse. Key strategies include education and AI literacy campaigns; sustainable, robust, and coherent global laws and ethical guidelines; stronger cybersecurity and safety cultures in organisations; and investment in the technical robustness of AI systems.

Eliminating AI Misuse with oxethica

We at oxethica can provide you with the necessary tools to ensure the fairness, ethical soundness and legal compliance of your AI system. Our AI governance platform provides your organisation with auditing and assessment programmes to help you operate and innovate AI responsibly.

Sign up today for more updates, or take advantage of our course offering in collaboration with the Saïd Business School at the University of Oxford for in-depth training about safe, lawful, and ethical AI.
